Assignment 4: Backend Design & Implementation

Abstract Data Models

All of these were taken from here

User

User State Machine

concept User

purpose authenticate a participant of RealTalk

state

registered: set User

username, password: registered -> one String

actions

register(n, p: String, out u: User)

u not in registered

registered += u

u.username := n

u.password := p

authenticate(n, p: String, out u: User)

u in registered

u.username = n and u.password = p

operational principle

after a user registers with a username and password, they can authenticate with that same username and password

after register(n, p, u), u in registered, u.username = n, u.password = p; authenticate(n, p, u') results in u = u'
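To make the User spec concrete, here is a minimal in-memory sketch in Python. It is not the RealTalk backend; the class names are hypothetical, "u not in registered" is assumed to mean "the username is not already taken", and passwords are kept in plain text only to mirror the spec.

```python
from dataclasses import dataclass


@dataclass
class User:
    username: str
    password: str  # plain text only to mirror the spec; a real backend should hash


class UserConcept:
    """Hypothetical in-memory sketch of the User concept."""

    def __init__(self) -> None:
        self.registered: list[User] = []

    def register(self, n: str, p: str) -> User:
        # Assumption: "u not in registered" is enforced as "username not yet taken".
        if any(u.username == n for u in self.registered):
            raise ValueError(f"username {n!r} is already registered")
        u = User(username=n, password=p)
        self.registered.append(u)
        return u

    def authenticate(self, n: str, p: str) -> User:
        # Return the registered user whose username and password both match.
        for u in self.registered:
            if u.username == n and u.password == p:
                return u
        raise ValueError("invalid username or password")
```

Calling `register("alice", "secret")` and then `authenticate("alice", "secret")` returns the very same `User` object, which is the operational principle stated above.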

Session

Session State Machine

concept Session [User]

purpose authenticate users for an extended period of time

state

active: set Session

curUser: active -> one User

actions

start(u: User, out s: Session)

s not in active

active += s

s.curUser := u

end(s: Session)

s in active

active -= s

getUser(s: Session, out u: User)

s in active

u := s.curUser

operational principle

after a session starts until it ends, getUser returns the same user that started the session

after start(u, s) until end(s), getUser(s, u') results in u = u'
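A minimal sketch of the Session concept, assuming sessions are identified by hypothetical integer ids and users are represented as plain strings (the spec leaves User generic). Drawing each id from a counter is one simple way to guarantee the precondition "s not in active".

```python
import itertools


class SessionConcept:
    """Hypothetical in-memory sketch of Session [User]."""

    def __init__(self) -> None:
        self.active: dict[int, str] = {}  # session id -> curUser
        self._ids = itertools.count(1)

    def start(self, u: str) -> int:
        s = next(self._ids)  # fresh id, so s is never already in active
        self.active[s] = u
        return s

    def end(self, s: int) -> None:
        if s not in self.active:
            raise KeyError(f"session {s} is not active")
        del self.active[s]

    def get_user(self, s: int) -> str:
        # Precondition: s in active.
        if s not in self.active:
            raise KeyError(f"session {s} is not active")
        return self.active[s]
```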

Friend

Friend State Machine

concept Friend [User]

purpose increase connections between users

state

friends: User -> set User

actions

friend(u1, u2: User)

u1.friends += u2

u2.friends += u1

unfriend(u1, u2: User)

when u2 in u1.friends

u1.friends -= u2

when u1 in u2.friends

u2.friends -= u1

areFriends(u1, u2: User, out b: Boolean)

when u2 in u1.friends or u1 in u2.friends

b := True

otherwise

b := False

operational principle

after a user friends another user, they are both considered each other's friends until one of them unfriends the other

after friend(u1, u2) until unfriend(u1, u2), u2 in u1.friends, u1 in u2.friends, and areFriends(u1, u2, b) results in b = True
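A minimal sketch of the Friend concept, with users as plain strings (an assumption). Recording both directions in `friend` keeps the relation symmetric, and `set.discard` matches the "when ... in" guards in `unfriend`, since discarding a missing entry is a no-op.

```python
from collections import defaultdict


class FriendConcept:
    """Hypothetical in-memory sketch of Friend [User]."""

    def __init__(self) -> None:
        self.friends: defaultdict[str, set[str]] = defaultdict(set)

    def friend(self, u1: str, u2: str) -> None:
        # Friendship is symmetric, so both directions are recorded.
        self.friends[u1].add(u2)
        self.friends[u2].add(u1)

    def unfriend(self, u1: str, u2: str) -> None:
        # discard() only removes an entry that is present.
        self.friends[u1].discard(u2)
        self.friends[u2].discard(u1)

    def are_friends(self, u1: str, u2: str) -> bool:
        return u2 in self.friends[u1] or u1 in self.friends[u2]
```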

Post

Post State Machine

concept Post [User, Content]

purpose share content with others

state

posts: User -> set Post

content: Post -> one Content

author: Post -> one User

actions

post(u: User, c: Content, out p: Post)

p.content := c

p.author := u

u.posts += p

unpost(u: User, p: Post)

when p in u.posts

u.posts -= p

forget content of p

forget author of p

operational principle

after post(u, c, p) until unpost(u, p), p in u.posts, u = p.author, and c = p.content
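A minimal sketch of the Post concept, assuming hypothetical integer post ids and string users and content. The "when p in u.posts" guard doubles as an authorship check: only the author can unpost.

```python
import itertools
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    id: int
    author: str
    content: str


class PostConcept:
    """Hypothetical in-memory sketch of Post [User, Content]."""

    def __init__(self) -> None:
        # user -> (post id -> Post)
        self.posts: defaultdict[str, dict[int, Post]] = defaultdict(dict)
        self._ids = itertools.count(1)

    def post(self, u: str, c: str) -> Post:
        # Each post records its author and content at creation time.
        p = Post(id=next(self._ids), author=u, content=c)
        self.posts[u][p.id] = p
        return p

    def unpost(self, u: str, p: Post) -> None:
        # Guard "when p in u.posts": only the author can remove the post.
        if p.id not in self.posts[u]:
            raise PermissionError(f"{u} is not the author of post {p.id}")
        # Removing the entry drops the post, with its content and author, from the state.
        del self.posts[u][p.id]
```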

Comment

Comment State Machine

concept Comment [Target, User, Content]

purpose react to other content

state

comments: Target -> set Content

author: Content -> one User

actions

comment(t: Target, u: User, c: Content)

t.comments += c

store u as the author of c

uncomment(t: Target, u: User, c: Content)

when the author of c is u

t.comments -= c

forget author of c

operational principle

after comment(t, u, c) until uncomment(t, u, c), c in t.comments and u is the author of c
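A minimal sketch of the Comment concept. One deviation from the spec is labeled here: instead of taking the Content item `c` as input, `comment` generates a hypothetical integer id for it, so two comments with identical text stay distinct. Targets and users are plain strings.

```python
import itertools
from collections import defaultdict


class CommentConcept:
    """Hypothetical in-memory sketch of Comment [Target, User, Content]."""

    def __init__(self) -> None:
        self.comments: defaultdict[str, set[int]] = defaultdict(set)  # target -> comment ids
        self.author: dict[int, str] = {}  # comment id -> user
        self.text: dict[int, str] = {}  # comment id -> comment body
        self._ids = itertools.count(1)

    def comment(self, t: str, u: str, text: str) -> int:
        c = next(self._ids)  # fresh id stands in for the Content item c
        self.comments[t].add(c)
        self.author[c] = u
        self.text[c] = text
        return c

    def uncomment(self, t: str, u: str, c: int) -> None:
        # Guard "when the author of c is u" (and c must be on target t).
        if c not in self.comments[t] or self.author.get(c) != u:
            raise PermissionError(f"{u} did not write comment {c} on {t}")
        self.comments[t].discard(c)
        del self.author[c]  # forget author of c
        del self.text[c]
```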

Like

Like State Machine

concept Like [Target, User]

purpose show approval or disapproval of information

state

likes, dislikes: Target -> set User

actions

like(t: Target, u: User)

t.likes += u

dislike(t: Target, u: User)

t.dislikes += u

neutralize(t: Target, u: User)

when u in t.likes

t.likes -= u

when u in t.dislikes

t.dislikes -= u

operational principle

after like(t, u) until dislike(t, u) or neutralize(t, u), u in t.likes

after dislike(t, u) until like(t, u) or neutralize(t, u), u in t.dislikes
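A minimal sketch of the Like concept. The actions above do not say that `like` removes an earlier dislike, but the operational principle (a like lasts until a dislike, and vice versa) implies the two sets are mutually exclusive; this sketch assumes that and moves the user between sets.

```python
from collections import defaultdict


class LikeConcept:
    """Hypothetical in-memory sketch of Like [Target, User]."""

    def __init__(self) -> None:
        self.likes: defaultdict[str, set[str]] = defaultdict(set)
        self.dislikes: defaultdict[str, set[str]] = defaultdict(set)

    def like(self, t: str, u: str) -> None:
        # Assumption: liking clears an earlier dislike, so u is never in both sets.
        self.dislikes[t].discard(u)
        self.likes[t].add(u)

    def dislike(self, t: str, u: str) -> None:
        self.likes[t].discard(u)
        self.dislikes[t].add(u)

    def neutralize(self, t: str, u: str) -> None:
        # Covers both "when u in t.likes" and "when u in t.dislikes".
        self.likes[t].discard(u)
        self.dislikes[t].discard(u)
```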

Trust

Trust State Machine

concept Trust [Target, User]

purpose show agreement or disagreement with the truthfulness of information

state

trusts, mistrusts: Target -> set User

actions

trust(t: Target, u: User)

t.trusts += u

mistrust(t: Target, u: User)

t.mistrusts += u

neutralize(t: Target, u: User)

when u in t.trusts

t.trusts -= u

when u in t.mistrusts

t.mistrusts -= u

operational principle

after trust(t, u) until mistrust(t, u) or neutralize(t, u), u in t.trusts

after mistrust(t, u) until trust(t, u) or neutralize(t, u), u in t.mistrusts
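Trust could be sketched exactly like Like, but an alternative representation is shown here: a single map from (target, user) to one stance, which makes trusting and mistrusting mutually exclusive by construction. Each action returns the previous stance, and repeating the same action raises an error; both choices are assumptions of this sketch, not the documented backend.

```python
from enum import Enum
from typing import Optional


class Stance(Enum):
    TRUST = "trust"
    MISTRUST = "mistrust"


class TrustConcept:
    """Hypothetical sketch of Trust [Target, User]: one stance per (target, user)."""

    def __init__(self) -> None:
        self.stance: dict[tuple[str, str], Stance] = {}

    def trust(self, t: str, u: str) -> Optional[Stance]:
        return self._set(t, u, Stance.TRUST)

    def mistrust(self, t: str, u: str) -> Optional[Stance]:
        return self._set(t, u, Stance.MISTRUST)

    def neutralize(self, t: str, u: str) -> Stance:
        # Guard: u must currently trust or mistrust t.
        if (t, u) not in self.stance:
            raise ValueError(f"{u} has no stance on {t}")
        return self.stance.pop((t, u))

    def trusts(self, t: str) -> set[str]:
        return {u for (tt, u), s in self.stance.items() if tt == t and s is Stance.TRUST}

    def mistrusts(self, t: str) -> set[str]:
        return {u for (tt, u), s in self.stance.items() if tt == t and s is Stance.MISTRUST}

    def _set(self, t: str, u: str, new: Stance) -> Optional[Stance]:
        prev = self.stance.get((t, u))
        if prev is new:
            # Repeating the same action is an error rather than a silent no-op.
            raise ValueError(f"{u} already has stance {new.value} on {t}")
        self.stance[(t, u)] = new
        return prev  # previous stance (or None), useful to callers such as karma
```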

Karma

Karma State Machine

concept Karma [User]

purpose show how truthful a user is

state

karma: User -> one Integer

actions

increase(u: User)

when u in karma

u.karma := u.karma + 1

when u not in karma

u.karma := 1

decrease(u: User)

when u in karma

u.karma := u.karma - 1

when u not in karma

u.karma := -1

operational principle

after increase(u) until decrease(u), u.karma is 1 more than before

after decrease(u) until increase(u), u.karma is 1 less than before
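A minimal sketch of the Karma concept with users as plain strings. Using a `defaultdict(int)` treats a missing user as having karma 0, so the spec's two branches per action collapse into one: for a new user, `+= 1` yields 1 and `-= 1` yields -1, exactly as the "u not in karma" cases require.

```python
from collections import defaultdict


class KarmaConcept:
    """Hypothetical in-memory sketch of Karma [User]."""

    def __init__(self) -> None:
        # Missing users implicitly have karma 0.
        self.karma: defaultdict[str, int] = defaultdict(int)

    def increase(self, u: str) -> None:
        self.karma[u] += 1

    def decrease(self, u: str) -> None:
        self.karma[u] -= 1
```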

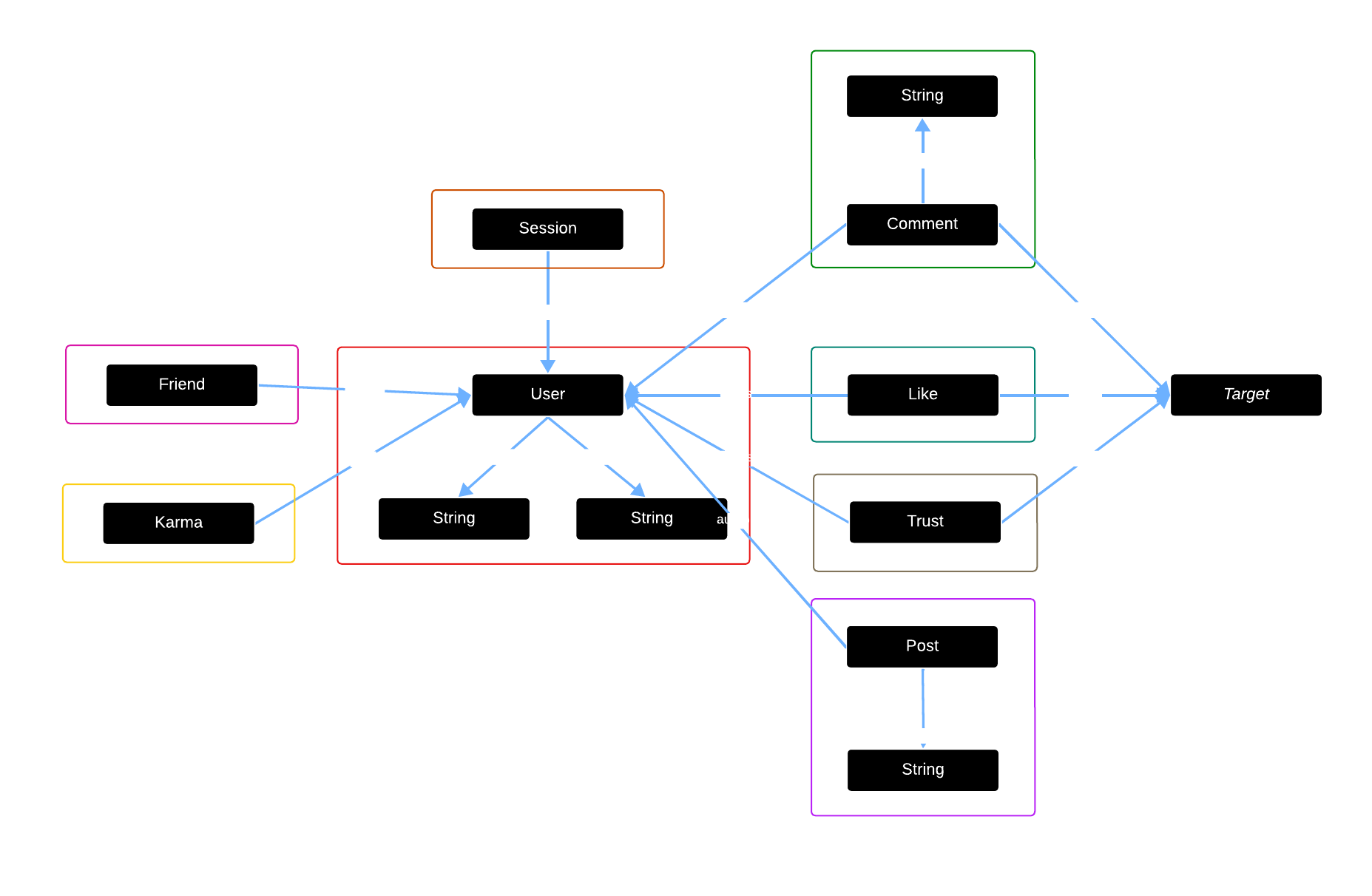

App State Diagram

Design Reflection

When designing comments, likes, and trusts, I did not consider that I would have to verify that their target existed before applying the comment, like, or trust to it. When actually implementing comments and likes, I realized I had to make sure a comment wasn't applied to another comment, or a like to a user. I did this by checking the target's ObjectId and ensuring it existed as a post. I could have allowed commenting on, liking, or trusting any target, but this could result in liking likes, which would be extremely strange for a social media app.
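The target check described above can be sketched as a validation step that runs before the like is recorded. In this hypothetical version a set of post ids stands in for looking the ObjectId up in the posts collection; `TargetNotFound`, `assert_is_post`, and `like_post` are illustrative names, not the project's actual functions.

```python
from collections import defaultdict


class TargetNotFound(Exception):
    """Raised when a like/comment/trust target is not an existing post."""


def assert_is_post(post_ids: set[str], target: str) -> None:
    # Stand-in for looking the target's id up in the posts collection.
    if target not in post_ids:
        raise TargetNotFound(f"{target} is not a post")


def like_post(post_ids: set[str], likes: defaultdict[str, set[str]], target: str, user: str) -> None:
    # Validate first, so a like can never attach to a user or to another like.
    assert_is_post(post_ids, target)
    likes[target].add(user)
```

Because validation happens before any state is touched, a rejected target leaves the likes map unchanged.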

When implementing the karma system, which depends on trusts and mistrusts, I ran into two bugs that allowed infinite karma farming. The first bug was simply trusting over and over again: even though an error was returned, the backend code didn't check for an error before increasing karma. This was easily fixed by checking for an error before touching karma. The second bug was more complicated. User A could trust, mistrust, and then neutralize their trust on User B's post or comment, causing a net karma gain of +1. This was caused by the backend code not checking the previous trust state of a target. For example, a trust would always give +1 karma, even if the post was previously mistrusted by the same person. In that case, it should give +2 to offset the -1 from the mistrust, and likewise -2 when switching from trust to mistrust.
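Both fixes can be expressed as one rule: reject an unchanged stance before touching karma, and otherwise add the difference between the new and previous stance values. The sketch below assumes hypothetical plain dicts for the stance and karma state and a `set_stance` helper that is not the project's actual code; `None` stands for a neutral stance.

```python
from typing import Optional

# Karma contributed by each stance; None means neutral.
VALUE = {"trust": 1, "mistrust": -1, None: 0}


def set_stance(stances: dict, karma: dict, target: str, actor: str, author: str,
               new: Optional[str]) -> None:
    prev = stances.get((target, actor))
    if prev == new:
        # Bug 1 fix: reject a repeated action before karma is touched.
        raise ValueError("stance unchanged")
    if new is None:
        del stances[(target, actor)]
    else:
        stances[(target, actor)] = new
    # Bug 2 fix: apply the difference between the new and previous stance,
    # e.g. mistrust -> trust is 1 - (-1) = +2.
    karma[author] = karma.get(author, 0) + VALUE[new] - VALUE[prev]
```

Replaying the exploit sequence from the reflection (trust, mistrust, then neutralize) now changes the author's karma by +1, -2, and +1, for a net of 0 instead of +1.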